How to Create a Podcast Summary w/ ChatGPT & Whisper AI API

2023-12-03

How to Create a Podcast Summary with the ChatGPT API + Whisper AI API

There are plenty of web applications out there that summarize podcasts, but they often cost money.

If you're a more thrifty individual, there's a more cost-effective solution: the ChatGPT and Whisper AI APIs. These tools let you automate podcast summaries efficiently and economically.

Now, this will take a bit of coding, but if you know how to copy and paste, you're 90% of the way there.

Let's get into it.

Step 1: Transforming Audio to Text with Whisper AI

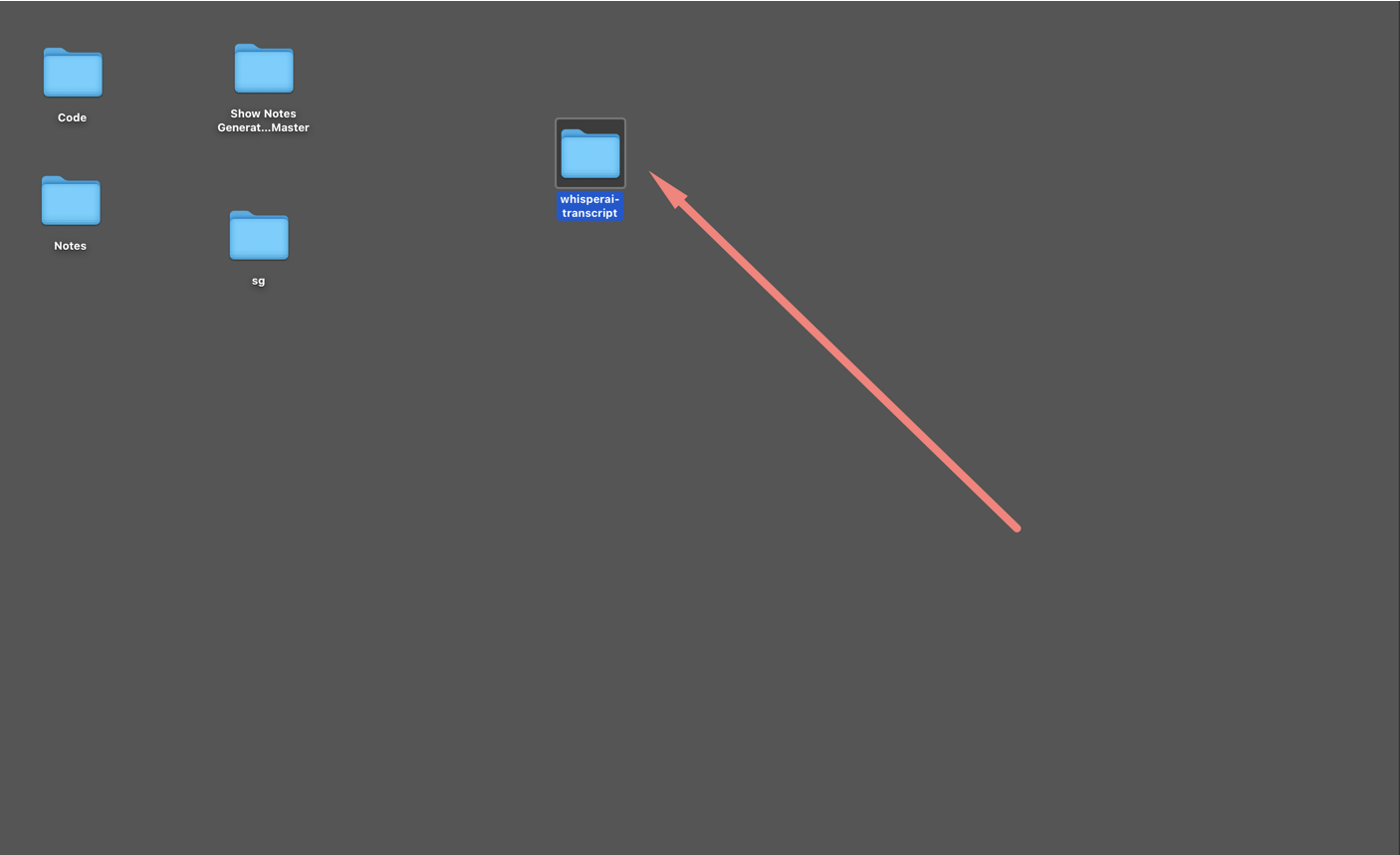

To kick things off, create a new folder on your desktop. You can call it whatever you like.

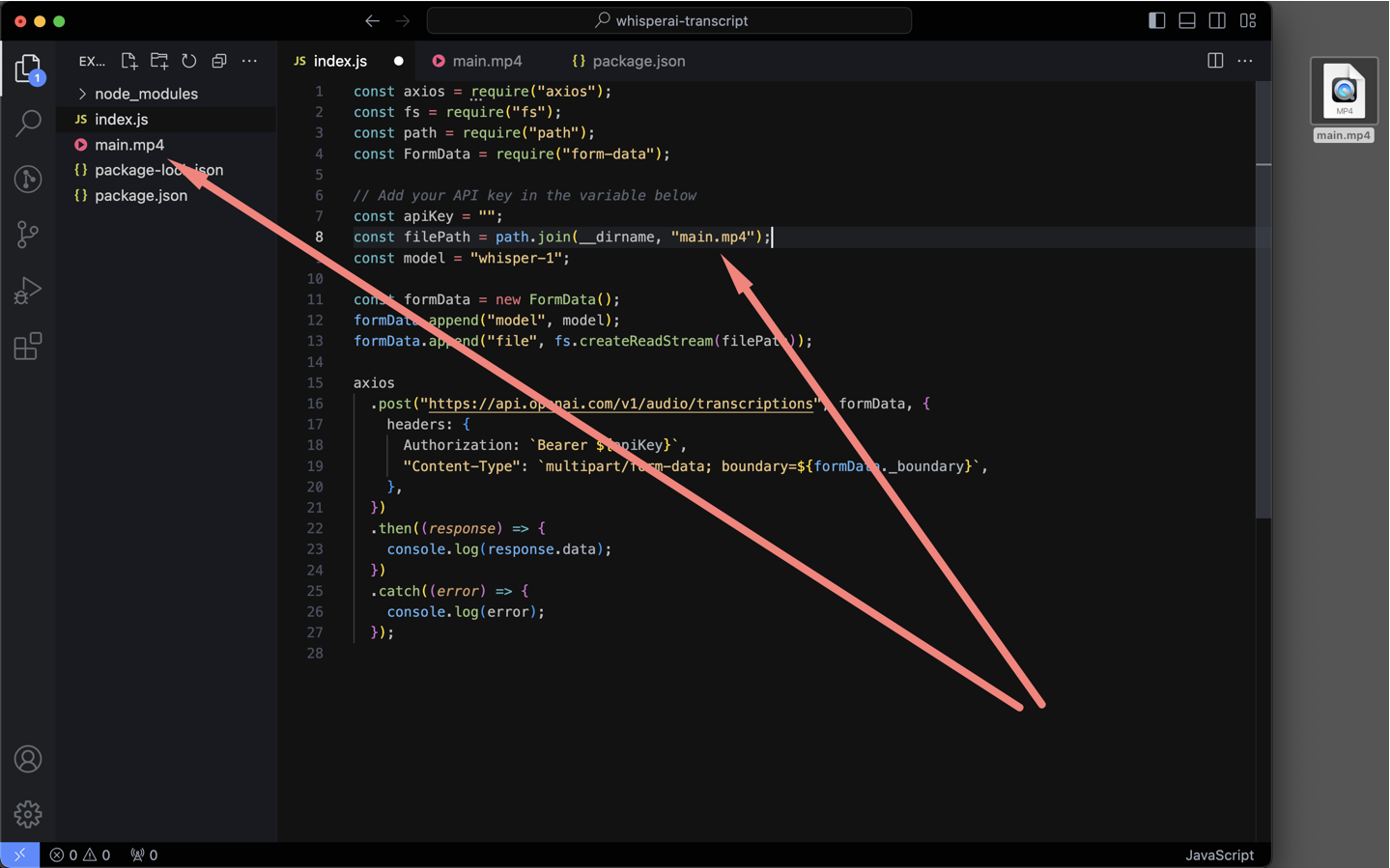

Next, open the folder in VS Code and create a file named index.js. If you're new to VS Code, it's a free code editor you can download here.

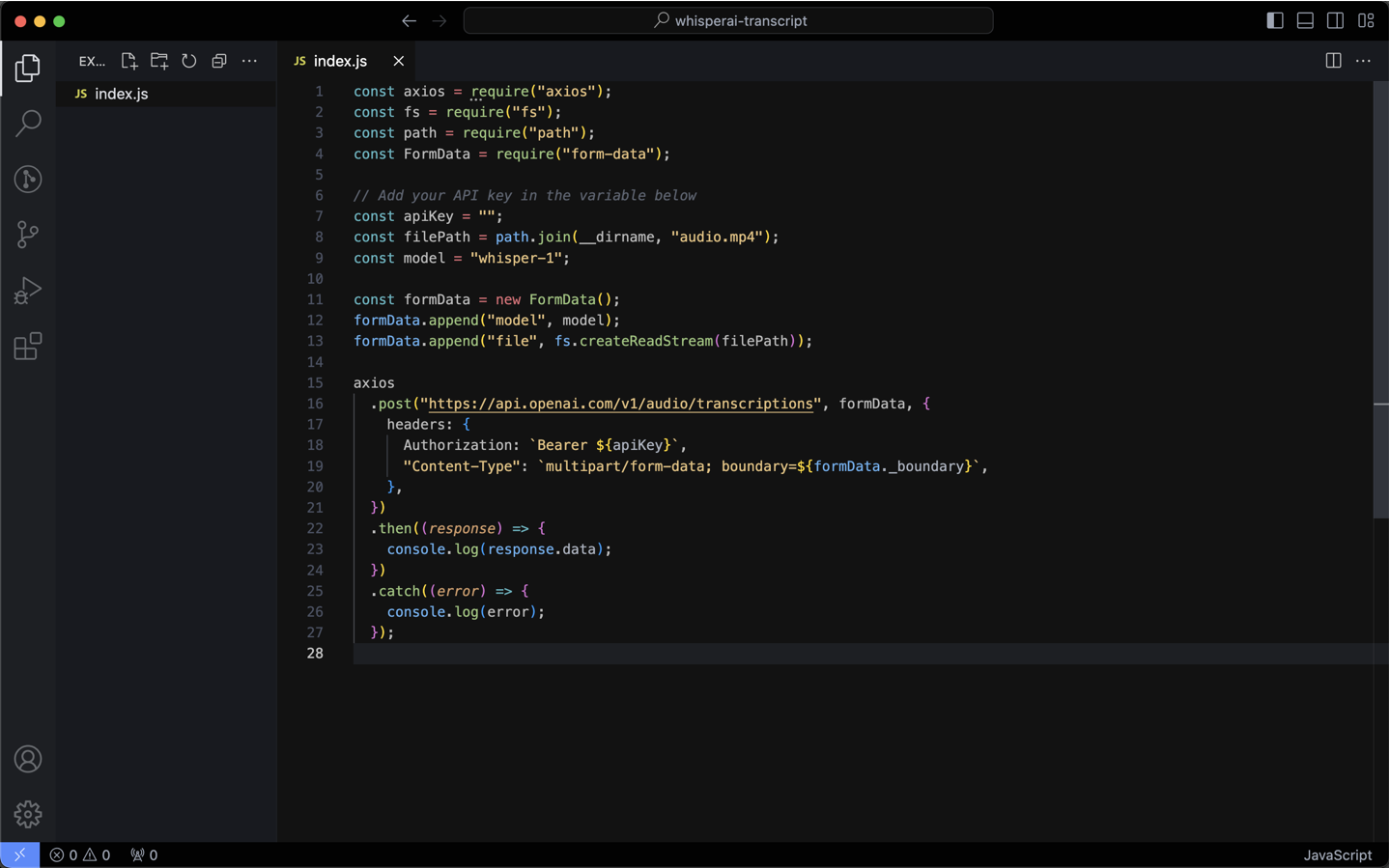

Copy and paste this code into the index.js file:

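```javascript
const axios = require("axios");
const fs = require("fs");
const path = require("path");
const FormData = require("form-data");

// Add your API key in the variable below
const apiKey = "";

// Change "audio.mp4" to the name of the audio file in this folder
const filePath = path.join(__dirname, "audio.mp4");
const model = "whisper-1";

// Build the multipart/form-data body the transcription endpoint expects
const formData = new FormData();
formData.append("model", model);
formData.append("file", fs.createReadStream(filePath));

axios
  .post("https://api.openai.com/v1/audio/transcriptions", formData, {
    headers: {
      Authorization: `Bearer ${apiKey}`,
      // getHeaders() supplies the Content-Type header with the correct multipart boundary
      ...formData.getHeaders(),
    },
  })
  .then((response) => {
    // The default JSON response holds the transcript in its "text" field
    console.log(response.data.text);
  })
  .catch((error) => {
    // Print the API's error message if there is one, otherwise the network error
    console.error(error.response ? error.response.data : error.message);
  });
```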

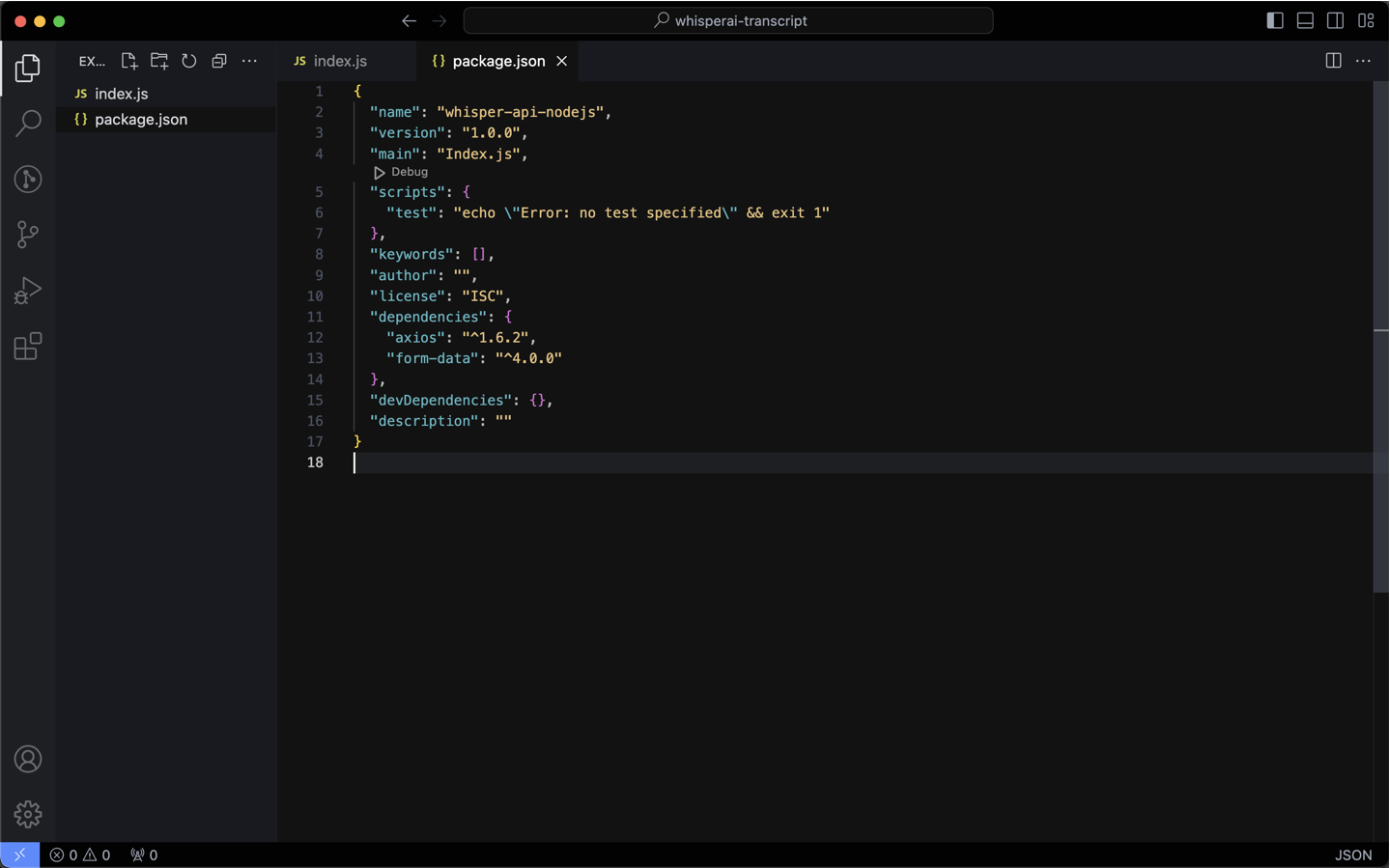

Then, create another file named package.json and paste the following:

```json
{
  "name": "whisper-api-nodejs",
  "version": "1.0.0",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "axios": "^1.6.2",
    "form-data": "^4.0.0"
  },
  "devDependencies": {},
  "description": ""
}
```

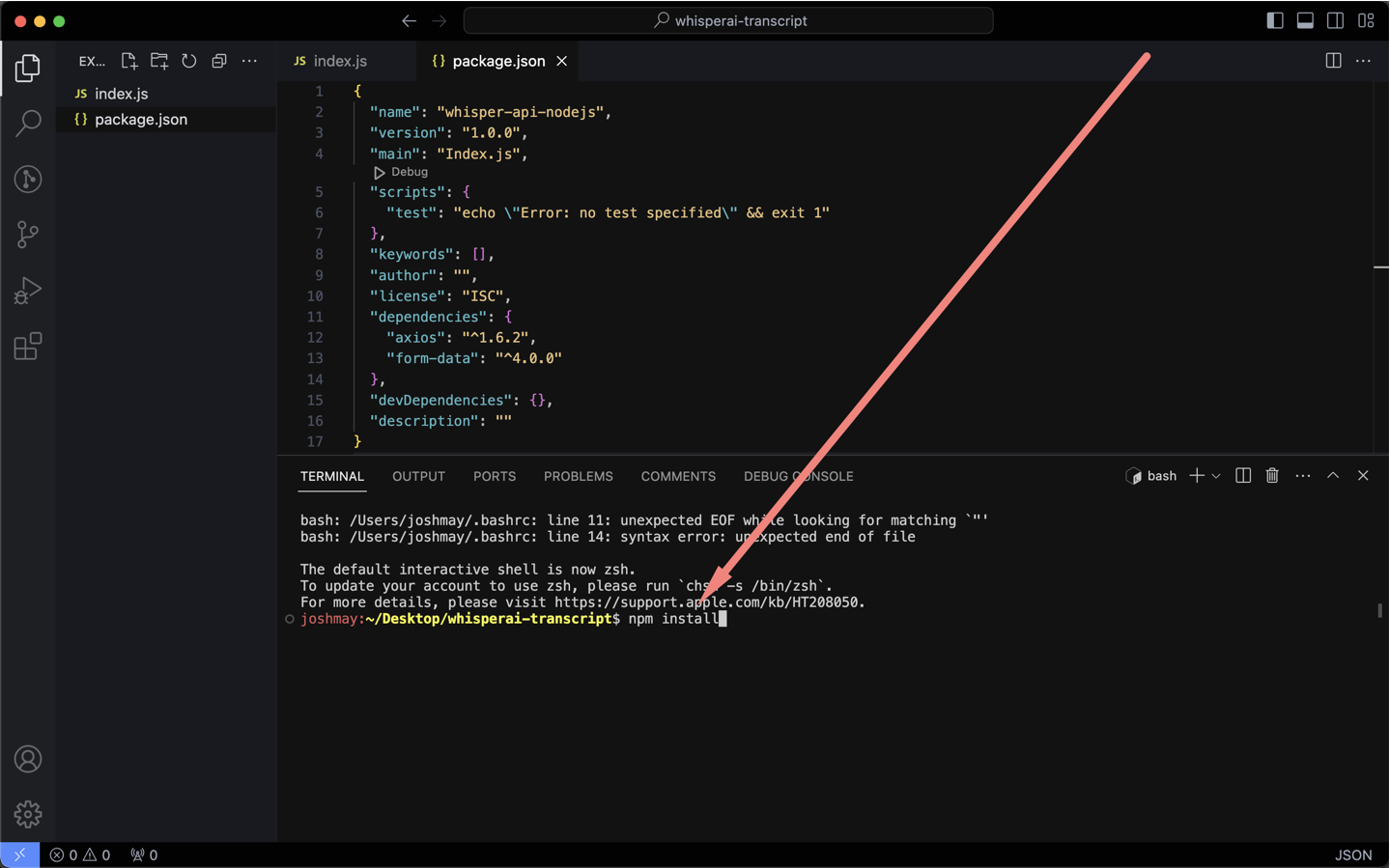

Run “npm install” in the VS Code terminal to install the necessary packages.

Now, place the audio file you want to transcribe in the project folder and change the file name in the filePath line of index.js (it's set to “audio.mp4”) to match.
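Whisper only accepts certain file types, so it can help to check the file before uploading it. The sketch below assumes the list of formats OpenAI's speech-to-text guide gave when this was written (mp3, mp4, mpeg, mpga, m4a, wav, webm); confirm it against the current docs. The helper names isSupportedAudioFile and findAudioFile are hypothetical, not part of any library.

```javascript
const fs = require("fs");
const path = require("path");

// Assumption: the formats listed in OpenAI's speech-to-text docs when this was written.
const SUPPORTED_EXTENSIONS = [".mp3", ".mp4", ".mpeg", ".mpga", ".m4a", ".wav", ".webm"];

// Hypothetical helper: true if the file name ends in a supported extension (case-insensitive).
function isSupportedAudioFile(fileName) {
  return SUPPORTED_EXTENSIONS.includes(path.extname(fileName).toLowerCase());
}

// Hypothetical helper: returns the full path of the first supported audio file
// in a folder, or null if there isn't one.
function findAudioFile(dir) {
  const match = fs.readdirSync(dir).find(isSupportedAudioFile);
  return match ? path.join(dir, match) : null;
}
```

In index.js you could then replace the hard-coded path with const filePath = findAudioFile(__dirname); and stop with a message if it returns null.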

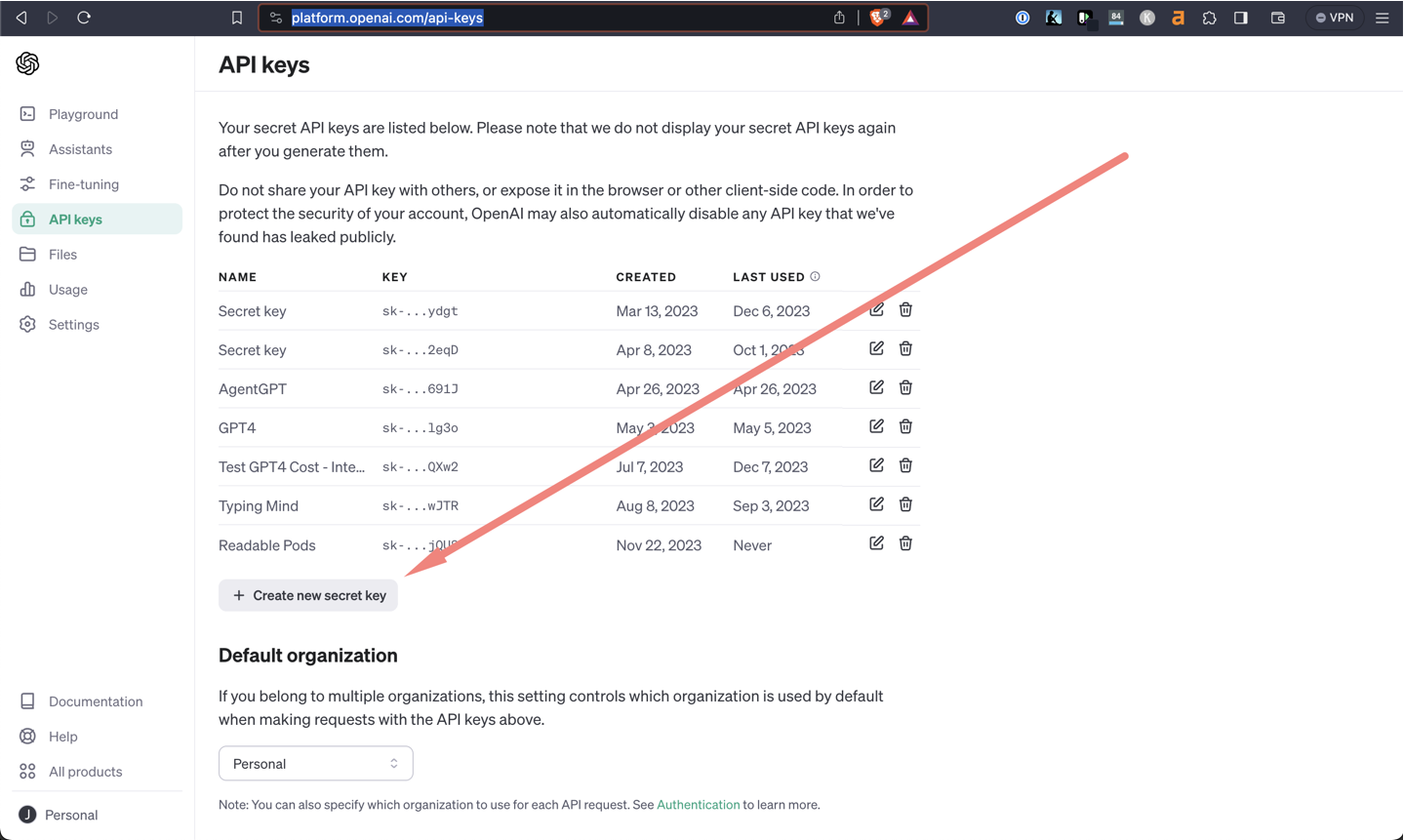

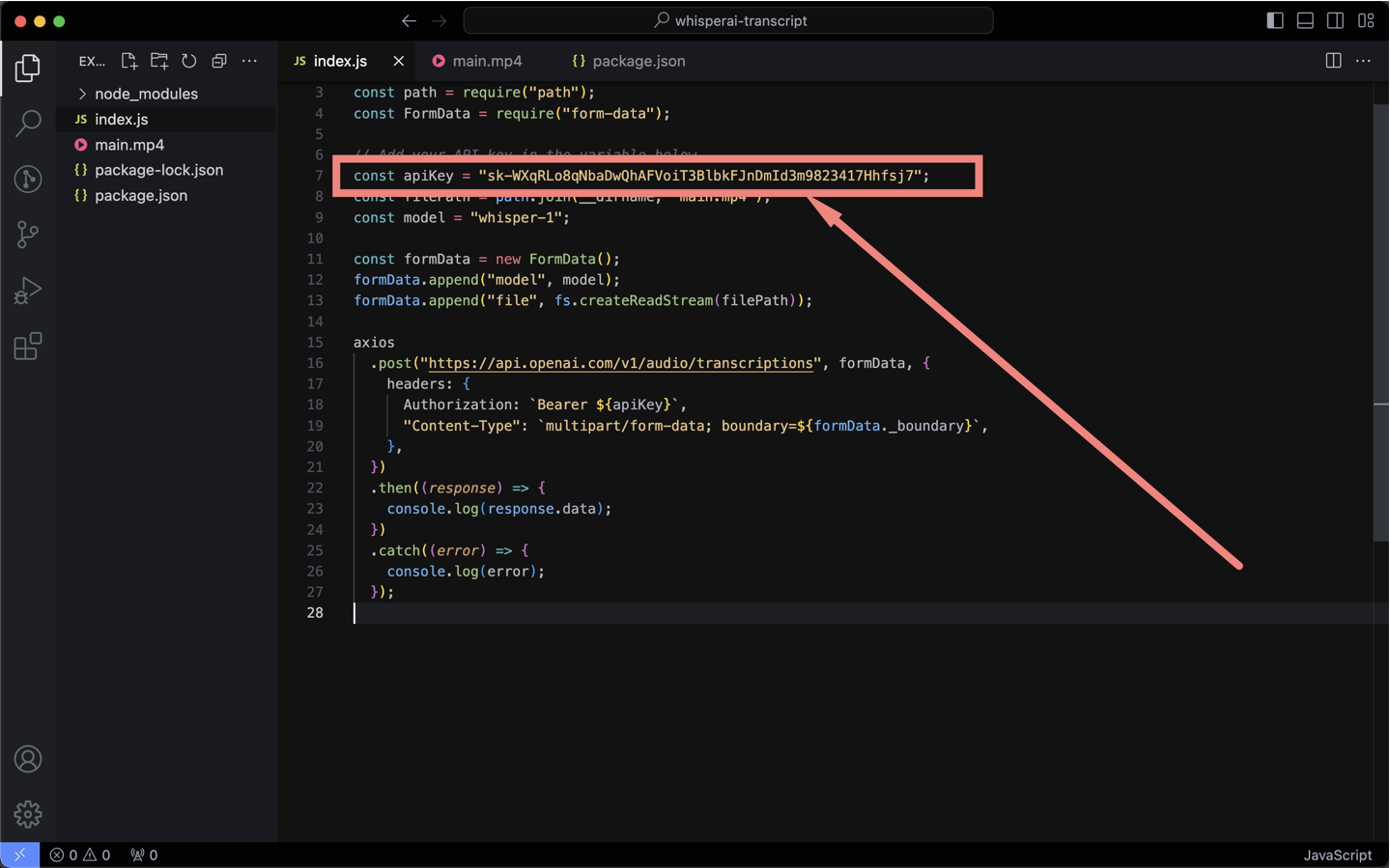

Next, sign up for an OpenAI account and get an API key, which you'll paste into the “apiKey” variable in the code. If this is your first time using the OpenAI API, you can create and find your keys on the API keys page: https://platform.openai.com/api-keys.
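Pasting the key into index.js works, but it is easy to share that file or push it to GitHub by accident. A minimal alternative, assuming you're comfortable setting an environment variable, is to read the key from OPENAI_API_KEY, the same variable name OpenAI's official libraries look for. The scripts in this guide don't read it on their own; getApiKey is a hypothetical helper.

```javascript
// Hypothetical helper: reads the OpenAI API key from the OPENAI_API_KEY
// environment variable instead of hard-coding it in index.js.
// The env parameter defaults to process.env and exists so the function is easy to test.
function getApiKey(env = process.env) {
  const key = (env.OPENAI_API_KEY || "").trim();
  if (!key) {
    throw new Error("Set the OPENAI_API_KEY environment variable before running this script.");
  }
  return key;
}
```

To use it, replace const apiKey = ""; with const apiKey = getApiKey(); and set the variable before running the script: export OPENAI_API_KEY=your-key on macOS or Linux, or $env:OPENAI_API_KEY="your-key" in Windows PowerShell.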

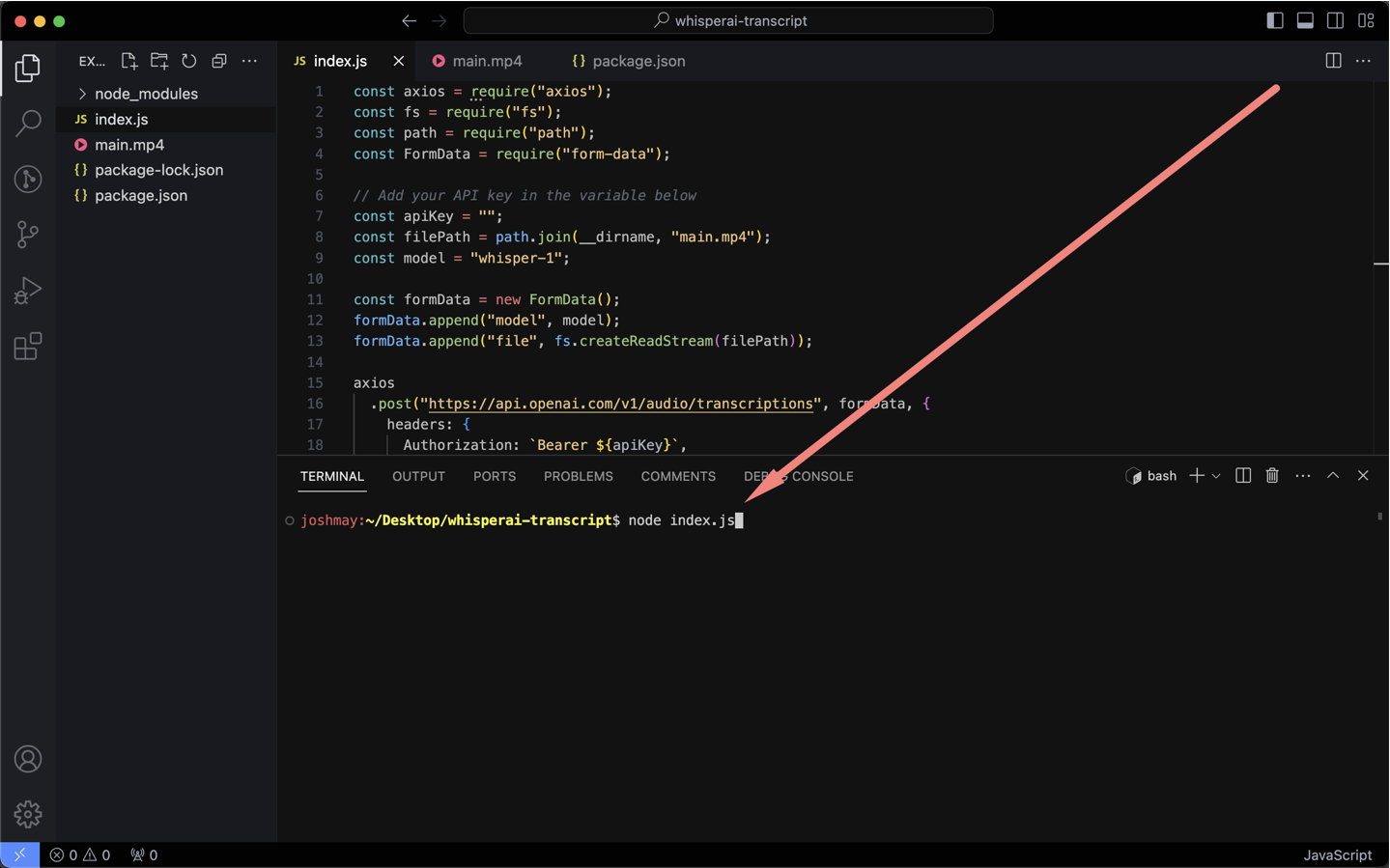

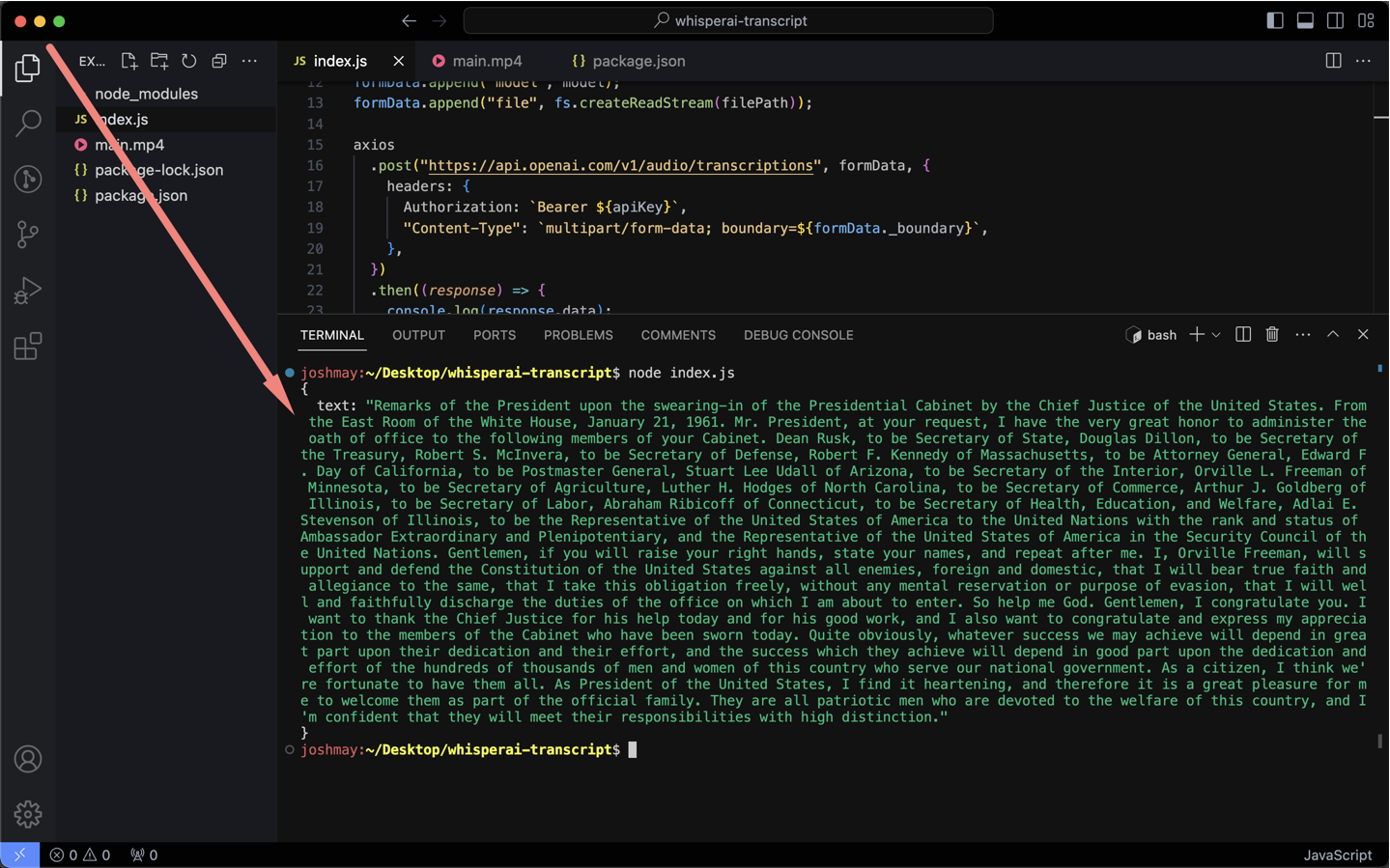

To run the transcription, copy and paste “node index.js” into the terminal and press Enter. The script uploads the audio file and prints the transcript.
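Copying a long transcript out of the terminal is tedious. One option, sketched below, is to write it to a file instead. saveTranscript and the transcript.txt file name are hypothetical choices for this example; Whisper's default JSON response does carry the transcript in its text field.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical helper: writes the transcript to a file (default: transcript.txt
// next to this script) so you don't have to copy it from the terminal.
function saveTranscript(text, outPath = path.join(__dirname, "transcript.txt")) {
  if (typeof text !== "string" || text.length === 0) {
    throw new Error("Expected a non-empty transcript string.");
  }
  fs.writeFileSync(outPath, text, "utf8");
  return outPath;
}
```

Inside the .then handler of the Step 1 script, you could call saveTranscript(response.data.text) instead of, or as well as, logging the result.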

If you prefer to download the code from GitHub, you can find it here.

Note: The API accepts files up to 25 MB. How much audio that covers depends on the bitrate: at 128 kbps, a common MP3 bitrate, 25 MB holds less than half an hour. If your file exceeds the limit, consider compressing it or splitting it with the PyDub Python library. Check OpenAI's documentation here for guidance on this.
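To see why the 25 MB cap runs out quickly, divide it by the bitrate: at 128 kbps, 25 × 1024 × 1024 bytes × 8 bits per byte ÷ 128,000 bits per second ≈ 1,638 seconds, or about 27 minutes. The sketch below does that arithmetic and checks a file's size before you upload it. It assumes the limit means 25 × 1024 × 1024 bytes (OpenAI's docs simply say 25 MB), so leave yourself some margin; the function names are hypothetical.

```javascript
const fs = require("fs");

// Assumption: treat the 25 MB upload limit as 25 * 1024 * 1024 bytes.
const UPLOAD_LIMIT_BYTES = 25 * 1024 * 1024;

// Minutes of audio that fit under the limit at a constant bitrate (kilobits per second).
function maxMinutesAtBitrate(kbps, limitBytes = UPLOAD_LIMIT_BYTES) {
  const bits = limitBytes * 8;
  const seconds = bits / (kbps * 1000);
  return seconds / 60;
}

// True if the file is small enough to send to the transcription endpoint in one request.
function isUnderUploadLimit(filePath, limitBytes = UPLOAD_LIMIT_BYTES) {
  return fs.statSync(filePath).size <= limitBytes;
}
```

maxMinutesAtBitrate(128) returns about 27.3 and maxMinutesAtBitrate(64) about 54.6. Halving the bitrate doubles how much audio fits, so re-encoding at a lower bitrate is often an alternative to splitting the file.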

If you're running into any issues, leave a comment or reach out. And if you'd prefer a video guide, let me know and I can make one. :)

Step 2: Summarizing Your Transcript Using the ChatGPT API

After obtaining the transcript, we'll use OpenAI's GPT-4 Turbo model, through the Chat Completions API, to summarize it.

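GPT-4 Turbo has a context window of 128,000 tokens, shared between the transcript you send and the summary it writes back. Using OpenAI's rule of thumb of about 0.75 words per token, that is roughly 96,000 words, or around 10 hours of podcast audio at about 150 words per minute (depending on how fast you talk).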
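Before sending a long transcript, you can roughly check whether it fits. OpenAI's rule of thumb is about 4 characters of English text per token. The sketch below applies that heuristic; it is not a real tokenizer, and reserving 4,096 tokens for the reply is an assumption based on the output cap of the GPT-4 Turbo preview model. estimateTokens and fitsInContext are hypothetical names.

```javascript
// Rough token estimate using the ~4 characters per token rule of thumb.
// This is an approximation, not an exact count.
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

// True if the estimated prompt size plus the space reserved for the reply
// fits inside the model's context window.
function fitsInContext(text, { contextWindow = 128000, reservedForOutput = 4096 } = {}) {
  return estimateTokens(text) + reservedForOutput <= contextWindow;
}
```

If fitsInContext returns false, trim the transcript or split it into parts. For exact counts, use a tokenizer such as OpenAI's tiktoken.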

Start by creating a new folder on your desktop (name it as you wish).

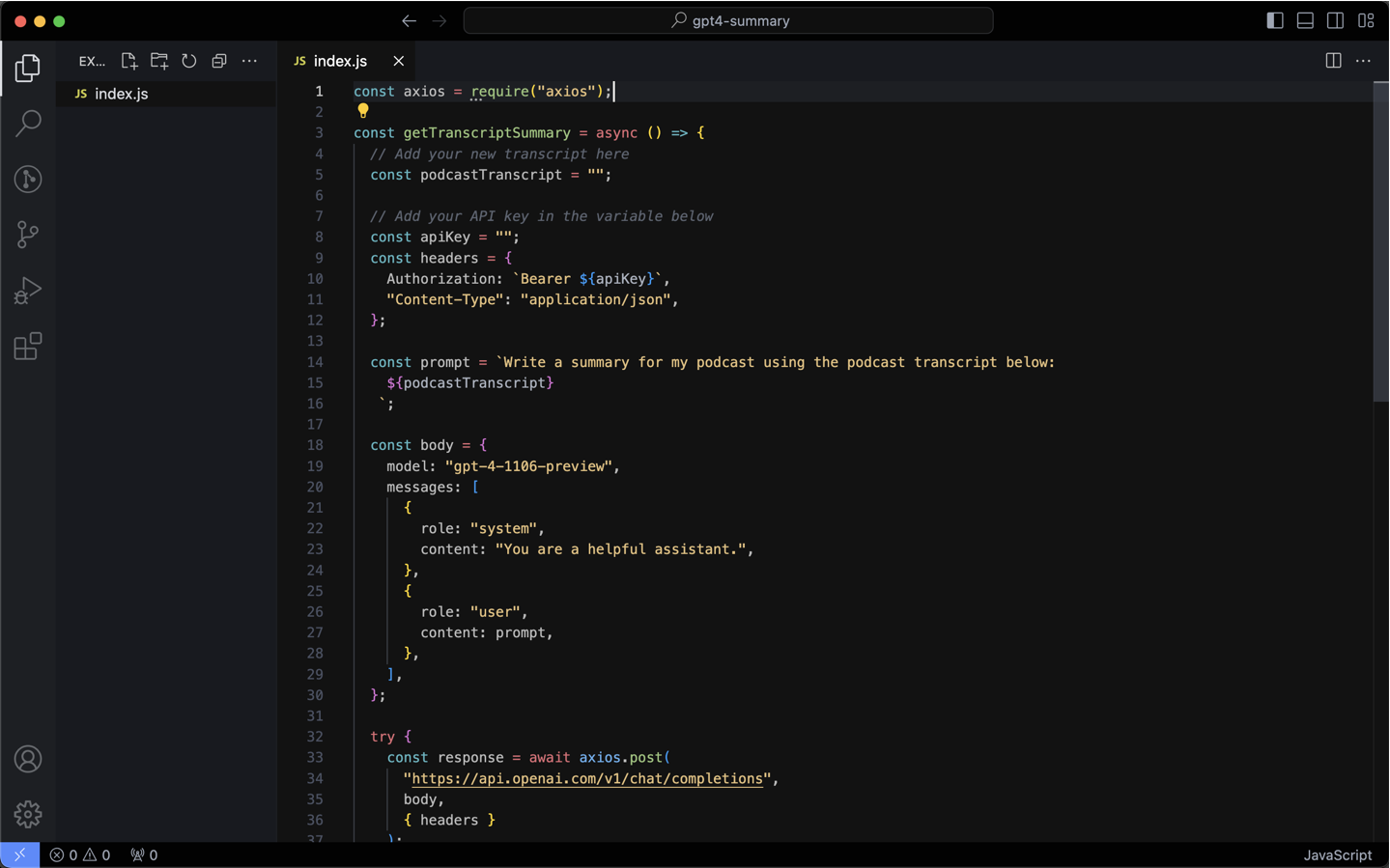

Open this folder in VS Code and create a file named “index.js”. Insert the following code:

```javascript
const axios = require("axios");

const getTranscriptSummary = async () => {
  // Add your new transcript here, between the backticks. A template literal can
  // hold line breaks and double quotes (but not backticks), unlike a "..." string.
  const podcastTranscript = ``;

  // Add your API key in the variable below
  const apiKey = "";

  const headers = {
    Authorization: `Bearer ${apiKey}`,
    "Content-Type": "application/json",
  };

  // Edit this prompt to change the tone, length, or format of the summary
  const prompt = `Write a summary for my podcast using the podcast transcript below:

${podcastTranscript}
`;

  const body = {
    model: "gpt-4-1106-preview", // GPT-4 Turbo (preview)
    messages: [
      { role: "system", content: "You are a helpful assistant." },
      { role: "user", content: prompt },
    ],
  };

  try {
    // axios rejects on non-2xx status codes, so API errors land in the catch block
    const response = await axios.post(
      "https://api.openai.com/v1/chat/completions",
      body,
      { headers }
    );
    const responseContent = response.data.choices[0].message.content.trim();
    console.log(responseContent);
  } catch (error) {
    // Print the API's error message if there is one, otherwise the network error
    console.error(error.response ? error.response.data : error.message);
  }
};

getTranscriptSummary();
```

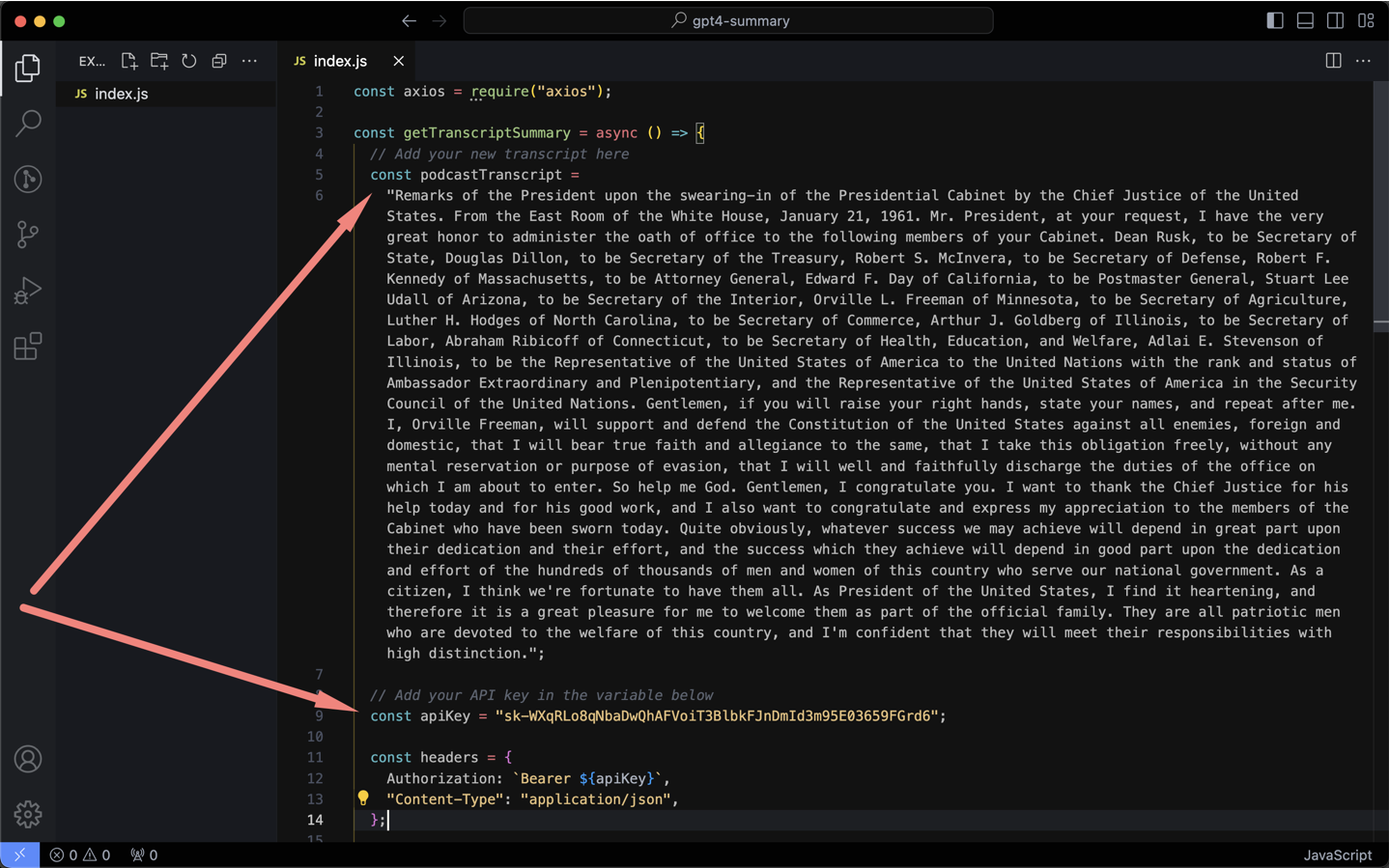

Next, add your OpenAI API key and insert your transcript into the “podcastTranscript” variable.
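Pasting a long transcript straight into index.js can break the script: a double-quoted string can't contain raw line breaks or unescaped double quotes. If you have the transcript in a text file (for example, copied from the Step 1 output), a small helper like the hypothetical loadTranscript below can read it instead. The transcript.txt file name is an assumption.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical helper: reads the transcript from a text file (default:
// transcript.txt next to this script) instead of pasting it into the code.
function loadTranscript(filePath = path.join(__dirname, "transcript.txt")) {
  const text = fs.readFileSync(filePath, "utf8").trim();
  if (!text) {
    throw new Error(`Transcript file is empty: ${filePath}`);
  }
  return text;
}
```

Then replace the podcastTranscript line with const podcastTranscript = loadTranscript(); and put transcript.txt in the same folder as index.js.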

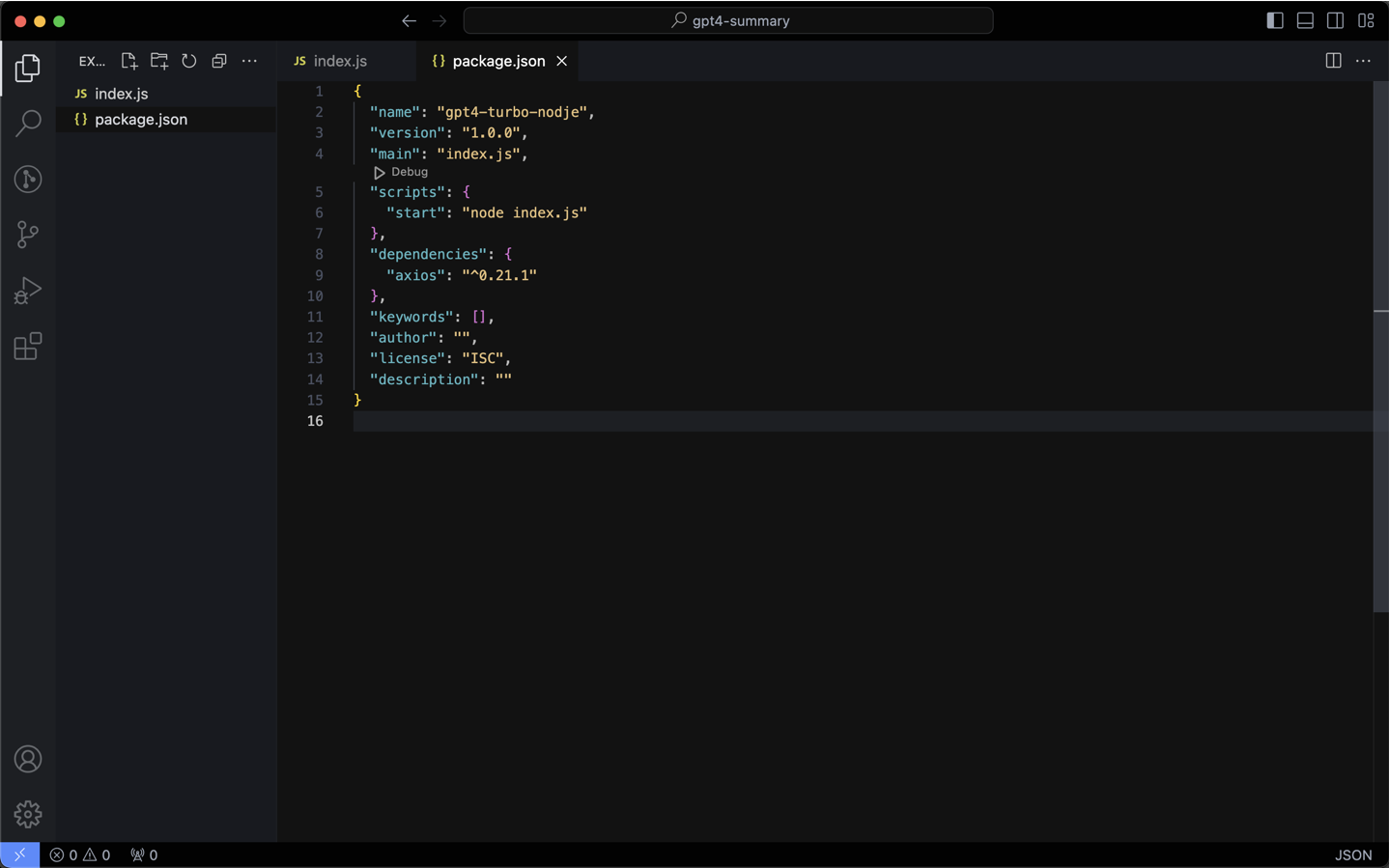

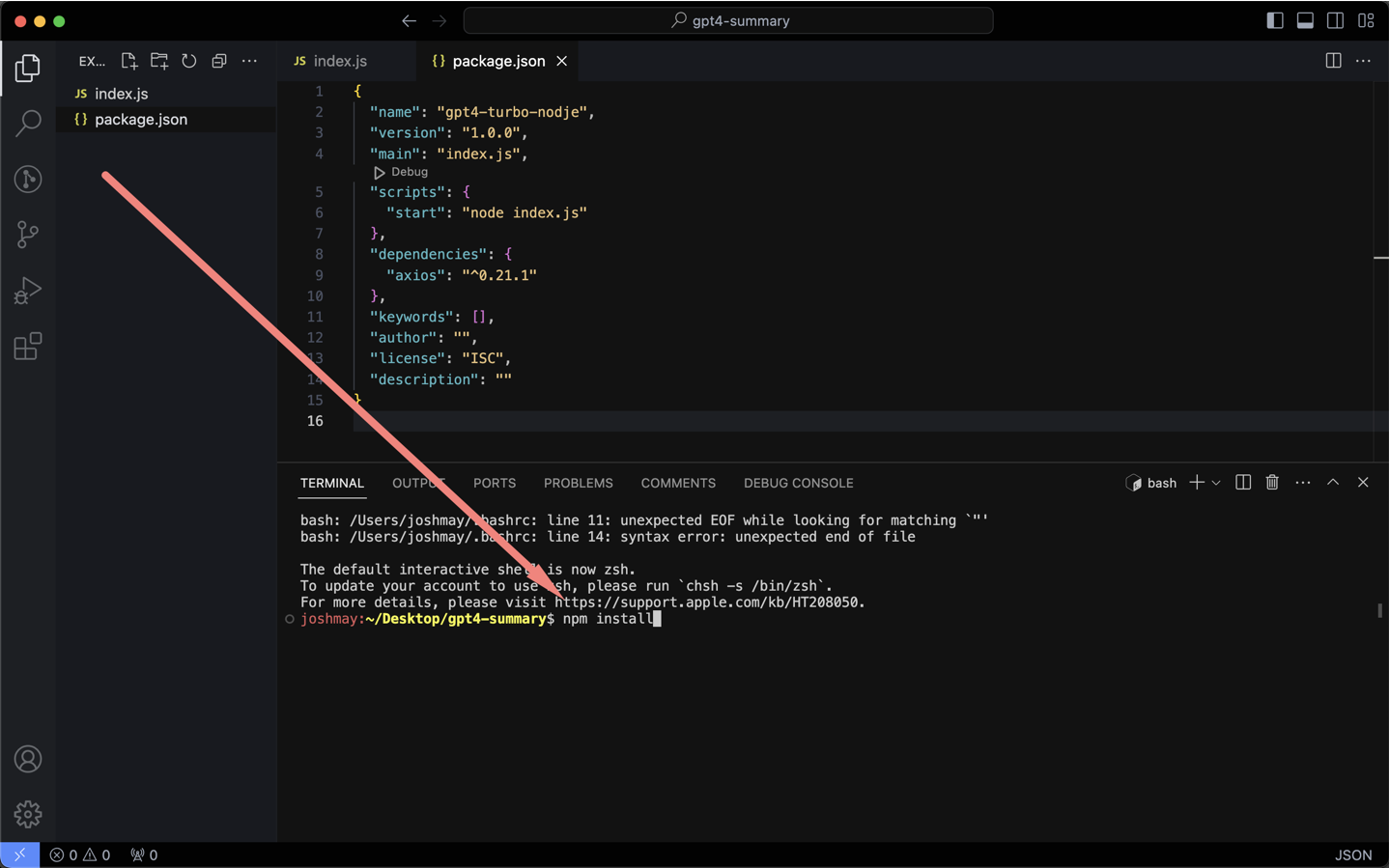

Now, create a package.json file and input the following:

```json
{
  "name": "gpt4-turbo-nodejs",
  "version": "1.0.0",
  "main": "index.js",
  "scripts": {
    "start": "node index.js"
  },
  "dependencies": {
    "axios": "^1.6.2"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "description": ""
}
```

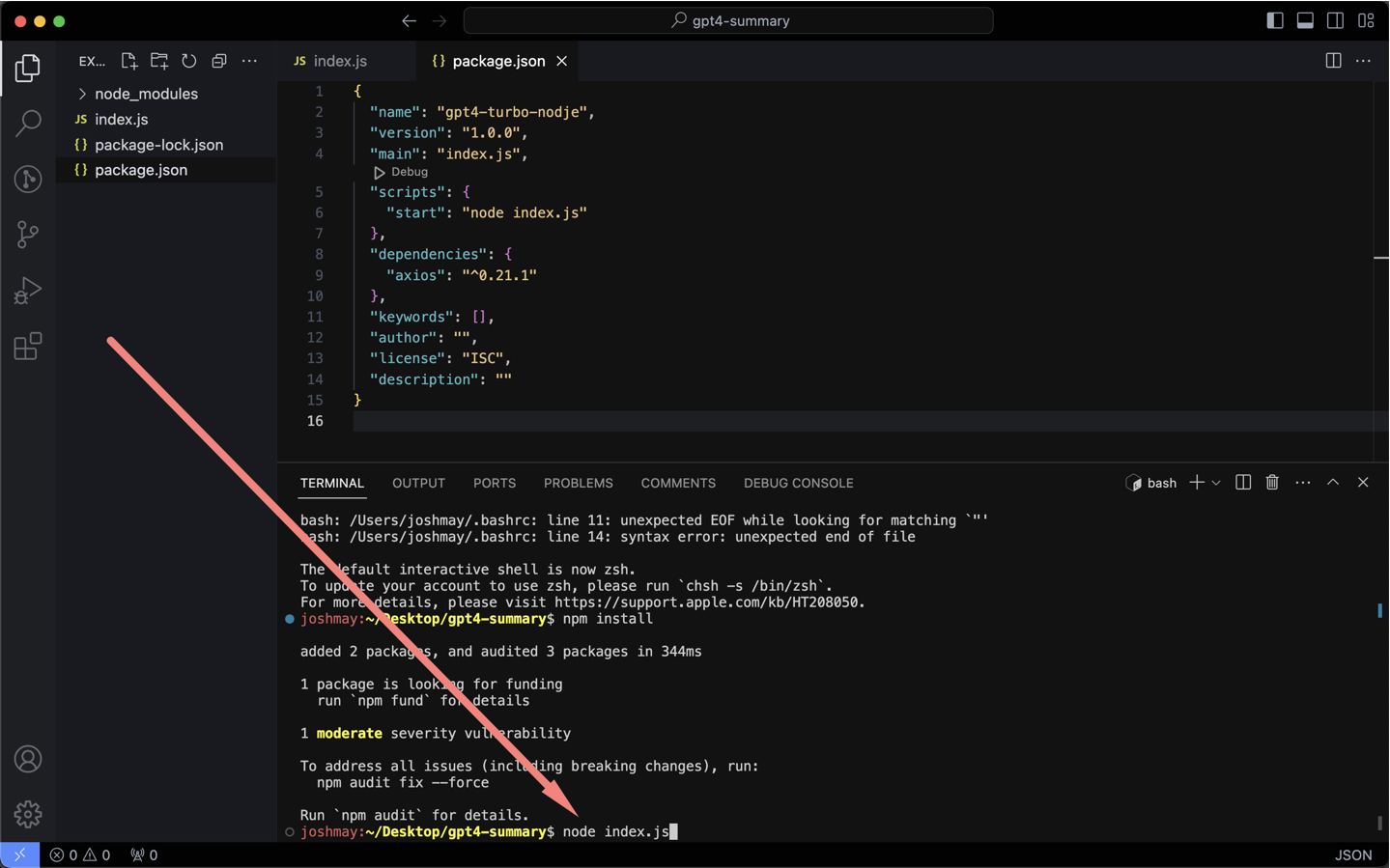

In VS Code's terminal, run “npm install” to set up the necessary package.

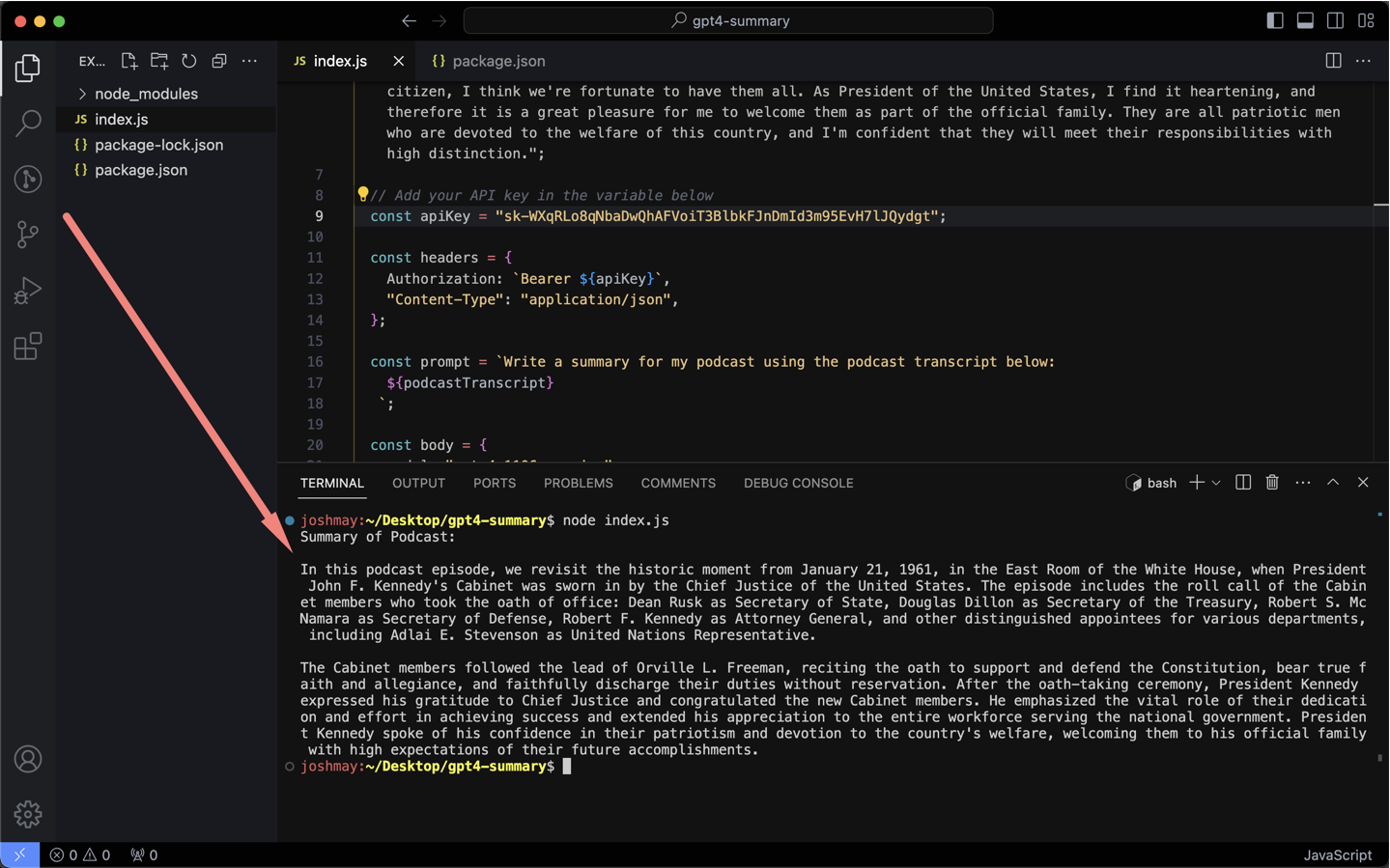

Finally, execute “node index.js” in the terminal to run the script and get the summary.

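For a specific tone, length, or format, edit the prompt variable in the script. For example, ask for a 150-word summary in a casual tone, or for a bulleted list of the key topics.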
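If you find yourself editing the prompt often, a small function can turn your preferences into the prompt text. buildSummaryPrompt and its tone, maxWords, and format options are hypothetical names for this sketch. The model treats the word limit as guidance, so expect it to land near the target rather than exactly on it.

```javascript
// Hypothetical helper: builds the summary prompt from a few style options
// so you can change tone, length, or format without rewriting the prompt.
function buildSummaryPrompt(transcript, { tone = "friendly", maxWords = 200, format = "paragraph" } = {}) {
  const formatInstruction =
    format === "bullets"
      ? "Format the summary as a bulleted list of the key points."
      : "Write the summary as one or two paragraphs.";
  return [
    `Write a ${tone} summary of my podcast episode in at most ${maxWords} words.`,
    formatInstruction,
    "Only use information that appears in the transcript.",
    "",
    "Transcript:",
    transcript,
  ].join("\n");
}
```

For example, const prompt = buildSummaryPrompt(podcastTranscript, { tone: "upbeat", maxWords: 150, format: "bullets" }); could replace the hard-coded prompt in the Step 2 script.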

If you want to download the file from GitHub, you can find it here.

Using a Third-Party Tool for Hassle-Free Summarization

For those who don’t want to do this manually, check out our solution at www.shownotesgenerator.com/app. We offer two free hours of audio-to-show-notes conversions, which include the summary, timestamps, and transcript.

This is ideal for podcasters seeking quick results without delving into the technical details.

Wrapping Up

That’s it!

Let me know your thoughts on this process. Did it work for you, or did you hit any issues?

Additionally, if there's interest in a video tutorial to complement this guide, let me know. :)

Happy podcasting!

FAQs About Podcast Summaries and OpenAI

What is the Whisper AI API?

OpenAI's Whisper API converts spoken audio into written text.

It is built on Whisper, an automatic speech recognition model that OpenAI trained on 680,000 hours of multilingual audio, and is exposed in the API as the “whisper-1” model.

It handles a range of accents and dialects and copes reasonably well with background noise.

The Whisper API is ideal for podcasters, journalists, and anyone looking to convert audio recordings into high-quality, readable transcripts.

Why do summaries matter for podcasting?

Summaries play a crucial role in the world of podcasting for several reasons:

Time Efficiency: Summaries provide a quick overview of the podcast content, making it easier for potential listeners to decide if an episode aligns with their interests.

SEO Optimization: Well-crafted summaries, rich in keywords, can improve the searchability of your podcast on search engines and podcast platforms, attracting a wider audience.

Can the Whisper AI API recognize different languages and accents?

Yes, the Whisper AI API is designed to recognize and transcribe speech in a variety of languages and accents.

Developed by OpenAI, Whisper AI employs advanced machine learning algorithms trained on a diverse dataset, which includes multiple languages and accents from around the world. This capability enables it to handle transcriptions in different linguistic contexts with a high degree of accuracy.

However, it's important to note that the level of accuracy can vary depending on the language, the clarity of speech, the presence of background noise, and the complexity of the accent.
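If you know what language the episode is in, you can tell the API rather than having it detect the language. The transcription endpoint accepts an optional language field (an ISO-639-1 code such as es for Spanish) and an optional prompt field that can steer the spelling of names and jargon. To keep the sketch dependency-free, it swaps in the FormData, Blob, and fetch globals built into Node 18 and later instead of the axios and form-data packages used in Step 1; the function names are hypothetical.

```javascript
// Builds the multipart body for POST https://api.openai.com/v1/audio/transcriptions.
// Uses the FormData and Blob globals available in Node 18+ (no npm packages needed).
function buildTranscriptionForm(audioBuffer, fileName, { language, prompt } = {}) {
  const form = new FormData();
  form.append("model", "whisper-1");
  form.append("file", new Blob([audioBuffer]), fileName);
  if (language) form.append("language", language); // ISO-639-1 code, e.g. "es"
  if (prompt) form.append("prompt", prompt); // e.g. names or terms to spell correctly
  return form;
}

// Sends the form with Node's built-in fetch and resolves to the transcript text.
// fetch sets the multipart Content-Type header (with boundary) automatically.
async function transcribe(audioBuffer, fileName, apiKey, options) {
  const response = await fetch("https://api.openai.com/v1/audio/transcriptions", {
    method: "POST",
    headers: { Authorization: `Bearer ${apiKey}` },
    body: buildTranscriptionForm(audioBuffer, fileName, options),
  });
  if (!response.ok) {
    throw new Error(`Transcription failed: ${response.status} ${await response.text()}`);
  }
  const data = await response.json();
  return data.text;
}
```

For example: transcribe(fs.readFileSync("audio.mp4"), "audio.mp4", apiKey, { language: "es", prompt: "Show Notes Generator" }).then(console.log);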

What are the limitations of the ChatGPT API in summarizing podcasts?

The ChatGPT API, while powerful in generating text-based summaries, does have certain limitations when it comes to summarizing podcasts:

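Token Limit: Each request has a maximum token limit (128,000 tokens for GPT-4 Turbo, shared between the transcript and the response), which caps how long a transcript you can summarize in a single request. The summary itself is capped too: the GPT-4 Turbo preview model returns at most 4,096 tokens.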

Context Understanding: While ChatGPT is adept at language processing, it may not fully grasp the nuances, tone, and subtleties of a podcast's content, potentially leading to less nuanced summaries.

Real-Time Processing: The API is not designed for real-time transcription or summarization, making it less suitable for live podcasting scenarios.

Dependency on Quality of Transcription: The quality of the summary heavily depends on the accuracy of the transcription provided by tools like Whisper AI. Inaccuracies in transcription can lead to errors or omissions in the summary.

Customization and Style Adaptation: While ChatGPT can be prompted to follow certain styles or tones, it might not always match the unique voice or style of the podcast host or content.
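When a transcript is too long for the token limit described above, a common workaround (not something the scripts in this guide do) is to split it, summarize each part with the Step 2 request, and then ask for a summary of those summaries. The sketch below covers only the splitting step; chunkText is a hypothetical helper that breaks on whitespace so no word is cut in half.

```javascript
// Hypothetical helper: splits text into chunks of at most maxChars characters,
// breaking on whitespace so words are never cut in half.
// A single word longer than maxChars becomes its own (oversized) chunk.
function chunkText(text, maxChars) {
  const words = text.split(/\s+/).filter(Boolean);
  const chunks = [];
  let current = "";
  for (const word of words) {
    const candidate = current ? `${current} ${word}` : word;
    if (candidate.length > maxChars && current) {
      chunks.push(current); // current chunk is full; start a new one
      current = word;
    } else {
      current = candidate;
    }
  }
  if (current) chunks.push(current);
  return chunks;
}
```

Using the rough 4-characters-per-token heuristic, chunkText(podcastTranscript, 200000) would give chunks of about 50,000 tokens each.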

Are there any costs associated with using the ChatGPT and Whisper AI APIs?

Yes. Both APIs are provided by OpenAI and billed on usage: the ChatGPT API by the number of tokens processed and the Whisper API by the minute of audio. The specific rates can change.

Here's a general overview:

ChatGPT API:

- GPT-4 Turbo costs $0.01 per 1K input tokens and $0.03 per 1K output tokens.

Whisper AI API:

- The Whisper API costs $0.006 per minute of audio.
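As a worked example using these prices: a 60-minute episode costs 60 × $0.006 = $0.36 to transcribe. If its transcript comes to 12,000 tokens and the summary to 1,000 tokens (hypothetical figures), summarizing costs 12 × $0.01 + 1 × $0.03 = $0.15, for about $0.51 in total. The sketch below does the same arithmetic; estimateCost is a hypothetical helper, and the prices are hard-coded from this article and will go out of date.

```javascript
// Prices quoted in this article (USD); check OpenAI's pricing page for current rates.
const WHISPER_PER_MINUTE = 0.006;
const GPT4_TURBO_INPUT_PER_1K = 0.01;
const GPT4_TURBO_OUTPUT_PER_1K = 0.03;

// Hypothetical helper: estimates the cost of transcribing and summarizing one episode.
function estimateCost({ audioMinutes, inputTokens, outputTokens }) {
  const transcription = audioMinutes * WHISPER_PER_MINUTE;
  const summary =
    (inputTokens / 1000) * GPT4_TURBO_INPUT_PER_1K +
    (outputTokens / 1000) * GPT4_TURBO_OUTPUT_PER_1K;
  return { transcription, summary, total: transcription + summary };
}
```

estimateCost({ audioMinutes: 60, inputTokens: 12000, outputTokens: 1000 }) reproduces the example above: about $0.36 for transcription, $0.15 for the summary, and $0.51 in total.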

For the most accurate and current pricing information, it's best to refer directly to OpenAI's website.

How can I improve the accuracy of the transcripts and summaries generated?

Improving the accuracy of transcripts and summaries generated by tools like Whisper AI and ChatGPT involves a combination of good recording practices, clear audio, and effective use of the APIs.

Here are some strategies:

Optimize Recording Quality:

Use high-quality recording equipment to capture clear audio. Minimize background noise and echo during recording sessions. Ensure speakers talk clearly and at a moderate pace.

Pre-process Audio Files:
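One way to pre-process audio before uploading it is to re-encode it as mono, low-bitrate MP3, which usually keeps speech intelligible while shrinking the file a lot. At 32 kbps, 25 × 1024 × 1024 bytes × 8 ÷ 32,000 bits per second ≈ 6,554 seconds, so roughly 109 minutes fit under the 25 MB limit. The sketch assumes ffmpeg is installed and on your PATH; the mono, 16 kHz, 32 kbps settings are illustrative choices rather than an OpenAI recommendation, and the function names are hypothetical.

```javascript
const { execFileSync } = require("child_process");

// Hypothetical helper: builds ffmpeg arguments to convert any input to a mono MP3
// at the given sample rate and bitrate (defaults: 16 kHz, 32 kbps).
function buildFfmpegArgs(inputPath, outputPath, { sampleRate = 16000, bitrateKbps = 32 } = {}) {
  return [
    "-y", // overwrite the output file if it already exists
    "-i", inputPath,
    "-ac", "1", // one audio channel (mono)
    "-ar", String(sampleRate), // sample rate in Hz
    "-b:a", `${bitrateKbps}k`, // audio bitrate
    outputPath,
  ];
}

// Runs ffmpeg synchronously; throws if ffmpeg is missing or the conversion fails.
function compressAudio(inputPath, outputPath, options) {
  execFileSync("ffmpeg", buildFfmpegArgs(inputPath, outputPath, options), { stdio: "inherit" });
  return outputPath;
}
```

For example, compressAudio("episode.wav", "episode-small.mp3") before pointing filePath in the Step 1 script at the smaller file.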

Review and Edit Transcripts:

Manually review the initial transcripts for errors or misinterpretations, especially for names, technical terms, and numbers.
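Once you have reviewed a few transcripts, you will probably notice the same names or terms coming out wrong every time. A hypothetical applyCorrections helper can fix those automatically using a small glossary you maintain. It only catches mistakes you already know about, so it complements a manual read-through rather than replacing it.

```javascript
// Hypothetical helper: applies a glossary of known corrections to a transcript.
// Matching is case-insensitive and limited to whole words, which works for
// glossary entries that start and end with letters or digits.
function applyCorrections(transcript, corrections) {
  let fixed = transcript;
  for (const [wrong, right] of Object.entries(corrections)) {
    // Escape regex special characters so the glossary entry is matched literally
    const escaped = wrong.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
    fixed = fixed.replace(new RegExp(`\\b${escaped}\\b`, "gi"), right);
  }
  return fixed;
}
```

For example, applyCorrections(transcript, { "chat gpt": "ChatGPT" }) turns both “chat GPT” and “Chat GPT” into “ChatGPT”.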

Prompts:

Be specific in your prompts to ChatGPT about the style, tone, and length of summary you desire. Highlight key themes or topics that must be included in the summary.

Article by

Josh May

Hey, I'm Josh, one of the guys behind Show Notes Generator. I'm passionate about technology, podcasting, and storytelling.